In a roundtable session with Ziad Asghar, Qualcomm’s SVP and General Manager of XR and Spatial Computing, I learned a lot about the chipmaker’s plans for the future of Extended Reality (XR), which is also closely tied to AI.

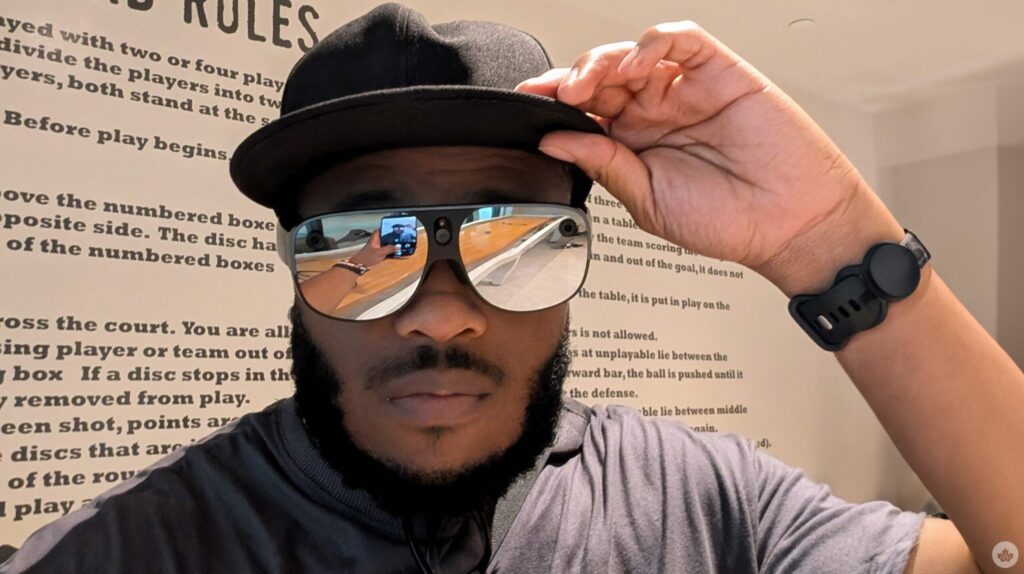

For instance, Asghar points to the rapid progress toward AR glasses that look like regular eyeglasses, similar to my Qualcomm-powered Ray-Ban Metas. Without giving too much away, he predicts they will arrive in the next year or two. Of course, Asghar couldn’t leak anything, but he said this with the confidence of someone who knows something the rest of us don’t, and I found that incredibly exciting.

I’m a huge fan of fun AI implications, and XR and AI have such good synergy. As AI models improve, they can enhance XR experiences like text-based real-time language translation and object recognition.

We’re only one to two years away

Asghar painted a picture of what an XR/AI future might look like: a world where you wear XR glasses and enter a meeting with people around a conference table. Above each person’s head, you’ll see a display overlay showing some of their LinkedIn details, including their name, profession and even their LinkedIn profile picture. I would add that this would be a great place for pronouns as well.

To go even further, if everyone in the room is wearing these glasses, the glasses could provide real-time information as well: perhaps subtitles as each person speaks, or a shared screen where graphs are presented and everyone in the room can make edits, like a next-level Snap Spectacles experience.

Of course, there might be some issues with this, such as privacy, but in this theoretical world, you can opt in or out of having your personal information shown via XR. To go even further, perhaps you could block someone, or choose not to be recognizable to these smart glasses, so that the person appears blurred out to anyone wearing them and can only be seen clearly with the glasses off.

Asghar also brought up other use cases. The glasses could help the visually impaired. You might need help reading the small print on a menu or in a book, or seeing something at a distance. Or what if you can’t remember where you put your phone or the book you were reading? The glasses could remind you where you left it or give you directions to it. Further, an overlay could help you with Google Maps so you can know where you’re going without pulling out your phone. There are so many use cases, and Qualcomm seems to be working on making this all happen.

While I have AI glasses, which are becoming increasingly common, there has yet to be that big turning point where AR glasses are a compelling device to purchase. Asghar thinks that time is just around the corner with advancements in display technology, power efficiency and AI capabilities. Specifically, we have about one to two years before these products come to market.

When asked when we could expect a Samsung Galaxy S2-like turning point for XR glasses, Asghar said we could expect it within a year.

“I think the time horizon is within a year… We’re working, of course, with Google, and Samsung is their partner… Now, the use case you like, and I actually like a lot, too, is doable today. We have a very good neural engine in here. All you need to do is go to my LinkedIn profile, look at my contacts and do a quick face match with those. Grab that information, put it on my head and augment it. My point is that it’s doable today… So I think once the displays are there in the right form factor, which I’m telling you, in the next one to two years time, and multiple companies are working on it.”

Project Astra is closer than we think

The key points here are that Qualcomm says it is working with Google, and that we’ll see something physical within the next year or two.

Project Astra is a prototype from @GoogleDeepMind exploring how a universal AI agent can be truly helpful in everyday life. Watch our prototype in action in two parts, each captured in a single take, in real time ↓ #GoogleIO pic.twitter.com/uMEjIJpsjO

— Google (@Google) May 14, 2024

Earlier this year, at Google I/O, the Mountain View tech giant showcased Project Astra and the future of AI assistants. Project Astra can interact with the world around it by taking in information, remembering what it sees, processing that information and understanding contextual details.

I think that sounds so much like what Asghar described, which makes me think that Qualcomm and Google might be working together on Project Astra glasses that we could see in the next year or two.

Qualcomm is working with plenty of companies, including Google, Meta, Snap and Sony. This future isn’t too far away, and I’m incredibly excited. As much as I love smartphones, smart glasses are becoming increasingly interesting to me. I really liked my quick experience with the Snap Spectacles, and I’ve owned both iterations of the Meta Ray-Bans.

Keep following MobileSyrup for XR and AI features, news and more.
